Augmented reality navigation represents a fundamental evolution beyond traditional GPS systems, overlaying digital guidance directly onto real-world environments rather than forcing users to reference separate 2D maps displayed on phone screens. The global augmented reality navigation market, valued at $1.62 billion in 2024, is projected to reach $11.69 billion by 2030 at a compound annual growth rate of 25.35%, driven by accelerating 5G deployment, smartphone proliferation, and expanding enterprise applications. Unlike traditional GPS navigation that requires glancing at maps—diverting attention from driving, walking, or task execution—AR navigation systems use sensor fusion combining GPS, inertial measurement units (accelerometers, gyroscopes), cameras, and computer vision to display turn-by-turn directions, landmarks, points of interest, and real-time information directly overlaid on the physical world visible through smartphone cameras or head-mounted displays.

Google Maps Live View, the most widely deployed AR navigation system, already operates in major cities globally, projecting arrows and directions directly onto streets at complex intersections and transit junctions. Mapbox’s January 2025 partnership with Hyundai AutoEver unveiled next-generation 3D navigation with lane-level AR guidance and ADAS-enabled collision warnings for in-vehicle deployment. These are not experimental prototypes but commercial systems serving millions of users daily. The technology addresses a fundamental problem classical GPS navigation created: the cognitive load and safety risk of consulting maps on phone screens while navigating unfamiliar environments. AR navigation solves this by placing directional information exactly where users naturally look—on their actual surroundings.

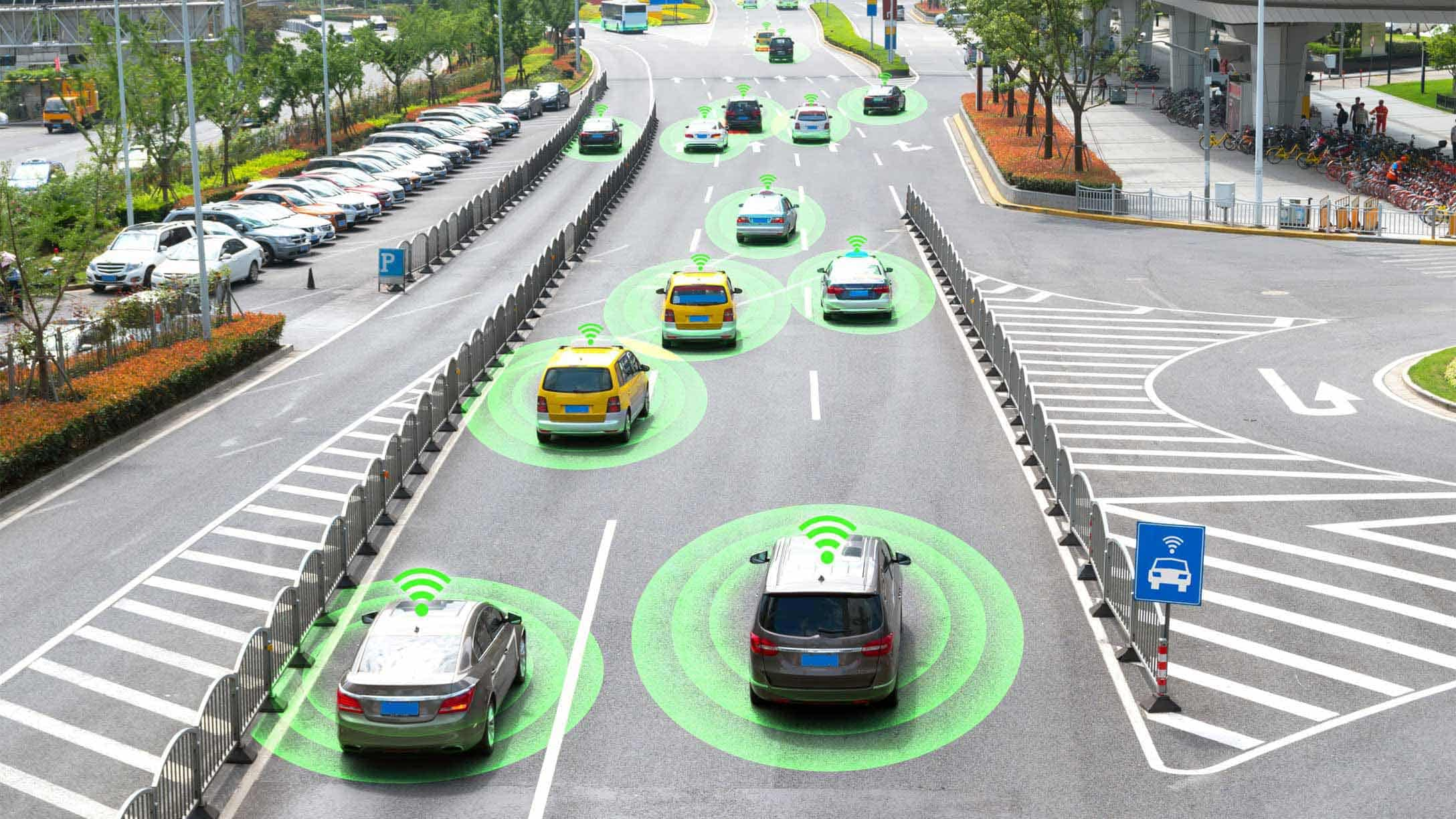

The implications extend far beyond consumer navigation convenience. Logistics companies use AR-guided indoor navigation to optimize warehouse picking routes, reducing fulfillment time by 20-30%. Retail stores deploy AR systems enabling customers to locate specific products within massive facilities without human assistance. Healthcare facilities guide patients through complex hospital layouts reducing anxiety and improving wayfinding efficiency. Autonomous vehicle development depends on AR context enrichment that supplements vehicle sensors with infrastructure-provided information about traffic conditions, construction, and environmental hazards. The convergence of maturing AR technology with 5G’s ultra-low latency and edge computing’s local processing capability is creating the technical foundation for AR navigation becoming the dominant interface through which humanity interacts with physical space.

The Problem: Why Traditional GPS Navigation Has Inherent Limitations

Traditional GPS navigation, revolutionary when deployed to consumer devices in the 2000s, suffers from fundamental limitations that AR navigation addresses directly.

The Attention Diversion Problem defines navigation with classical 2D maps. A driver relies on turn-by-turn guidance displayed on a phone or dashboard, requiring periodic glances away from the road to understand upcoming directions. For pedestrians navigating unfamiliar cities, consulting maps on phone screens consumes attention, reduces situational awareness, and increases vulnerability to traffic accidents, pickpocketing, and environmental obstacles. The cognitive burden of mentally rotating 2D map views to match the physical environment’s orientation (rotating the phone or mentally translating “northwest” to physical direction) creates friction that diminishes navigation usability.

The Precision Limitation constrains classical GPS navigation’s applicability. Standard civilian GPS operates at 5-10 meter accuracy, which is sufficient for identifying which street a destination lies on but insufficient for designating the specific entrance, the correct subway platform, or the precise location of a product on a shelf. Indoor environments are largely inaccessible to GPS because building structures attenuate or block the satellite signals. Shopping malls, airports, hospitals, warehouses, and office buildings, the environments where wayfinding challenges are most acute, remain dark zones where traditional GPS navigation is unreliable or fails entirely.

The Real-Time Information Integration Constraint limits classical GPS navigation’s functionality. A 2D map application can display overlaid traffic or incidents, but the additional layers clutter the interface, making it difficult to perceive the actual path forward amidst visual complexity. Users constantly switch between apps—map application, messaging, restaurant information—rather than receiving integrated real-time information seamlessly combined with navigation guidance.

These limitations explain why augmented reality navigation represents not merely an incremental improvement but a fundamental paradigm shift in how humans navigate complex environments.

The Technical Foundation: Sensor Fusion and Precise Localization

AR navigation’s practical feasibility depends on achieving and maintaining positioning far more precise than traditional GPS’s 5-10 meter accuracy, down to centimeter level for overlays that must stay registered to specific objects, entrances, or lanes. Meeting this requirement took technical innovations across multiple domains.

Sensor Fusion Architecture combines complementary positioning technologies to achieve precision exceeding any single modality. GPS provides global positioning with 5-10 meter accuracy outdoors. Inertial measurement units (IMUs), the accelerometers, gyroscopes, and magnetometers integrated into modern smartphones, measure device movement, orientation, and heading at update rates of hundreds of samples per second. GPS supplies absolute position while the IMU supplies precise relative movement, enabling the system to maintain position estimates even during brief GPS signal loss (urban canyons, covered streets). The fusion algorithm, often a Kalman filter, weights these heterogeneous measurements by their uncertainty and combines them into a unified position estimate more accurate than either source alone.
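The GPS-plus-IMU fusion described above can be sketched as a one-dimensional Kalman filter. This is a minimal illustration and not the filter any particular product uses: the class name `Kalman1D`, the noise variances, and the single-axis state are all assumptions made for the example. The IMU acceleration drives the prediction step, and each GPS fix corrects the accumulated drift.

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    """Position/velocity Kalman filter along one axis (illustrative only)."""
    pos: float = 0.0
    vel: float = 0.0
    # Covariance entries [[p00, p01], [p10, p11]]; large values = unsure start.
    p00: float = 100.0
    p01: float = 0.0
    p10: float = 0.0
    p11: float = 100.0
    accel_var: float = 0.5   # assumed IMU acceleration noise, (m/s^2)^2
    gps_var: float = 25.0    # assumed GPS noise, (5 m)^2

    def predict(self, accel: float, dt: float) -> None:
        """Dead-reckon forward using the IMU acceleration reading."""
        self.pos += self.vel * dt + 0.5 * accel * dt * dt
        self.vel += accel * dt
        # P = F P F^T with F = [[1, dt], [0, 1]]
        p00 = self.p00 + dt * (self.p10 + self.p01) + dt * dt * self.p11
        p01 = self.p01 + dt * self.p11
        p10 = self.p10 + dt * self.p11
        p11 = self.p11
        # Add process noise Q = G G^T * accel_var with G = [dt^2 / 2, dt]
        g0, g1 = 0.5 * dt * dt, dt
        self.p00 = p00 + g0 * g0 * self.accel_var
        self.p01 = p01 + g0 * g1 * self.accel_var
        self.p10 = p10 + g1 * g0 * self.accel_var
        self.p11 = p11 + g1 * g1 * self.accel_var

    def update(self, gps_pos: float) -> None:
        """Correct the estimate with an absolute GPS position fix."""
        innovation = gps_pos - self.pos
        s = self.p00 + self.gps_var          # innovation variance
        k0, k1 = self.p00 / s, self.p10 / s  # Kalman gain for pos and vel
        self.pos += k0 * innovation
        self.vel += k1 * innovation
        # P = (I - K H) P with H = [1, 0]
        p00 = (1.0 - k0) * self.p00
        p01 = (1.0 - k0) * self.p01
        p10 = self.p10 - k1 * self.p00
        p11 = self.p11 - k1 * self.p01
        self.p00, self.p01, self.p10, self.p11 = p00, p01, p10, p11
```

Calling `predict` at the IMU rate and `update` only when a GPS fix arrives mirrors the behavior described in the text: during a GPS outage the estimate keeps moving on inertial data while its variance `p00` grows, and the next fix pulls it back.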

Visual Positioning Technology addresses GPS’s fundamental indoor limitation. The smartphone camera continuously captures video of surroundings; computer vision algorithms match captured images against pre-built 3D maps of environments (called point clouds—millions of data points representing precise spatial geometry). This visual positioning system can localize a device to centimeter-level accuracy indoors without any GPS signal, enabling seamless navigation from outdoor streets through building interiors.
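As a heavily simplified stand-in for the matching step, the sketch below recovers a device's position and heading from landmark correspondences in two dimensions. Production visual positioning solves a full 3D camera pose from image features, which is well beyond this example; the function name `estimate_pose_2d` and the assumption that correspondences are already known and outlier-free are illustrative only.

```python
import math


def estimate_pose_2d(observed, mapped):
    """Least-squares rotation and translation taking device-frame points
    onto their matched map-frame points. Returns (theta, tx, ty), where
    (tx, ty) is the device origin expressed in map coordinates."""
    n = len(observed)
    ox = sum(p[0] for p in observed) / n
    oy = sum(p[1] for p in observed) / n
    mx = sum(p[0] for p in mapped) / n
    my = sum(p[1] for p in mapped) / n

    # Accumulate dot and cross products of the centered point pairs.
    s_dot = 0.0
    s_cross = 0.0
    for (x, y), (u, v) in zip(observed, mapped):
        ax, ay = x - ox, y - oy
        bx, by = u - mx, v - my
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx

    # The optimal rotation angle maximizes the summed alignment of the pairs.
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    tx = mx - (c * ox - s * oy)
    ty = my - (s * ox + c * oy)
    return theta, tx, ty
```

With noisy real-world matches, a robust wrapper (for example, rejecting outlier correspondences before fitting) would normally sit around a solver like this.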

3D Mapping and Digital Twins create the reference databases enabling visual positioning. Rather than traditional 2D floor plans, AR navigation requires detailed 3D maps capturing building geometry with millimeter precision. Google Street View, LiDAR scanning, and crowdsourced mapping contribute to building comprehensive 3D replicas (“digital twins”) of physical environments. These digital twins, continuously updated as environments change through construction or renovation, form the foundation enabling AR systems to precisely locate users and register virtual information to match physical spaces.

Real-Time Processing Demands shaped the technical architecture. Decisions about overlaid information, environmental understanding, and position updates must occur at sub-100-millisecond latencies to maintain visual coherence. If virtual navigation arrows take 200 milliseconds to respond to user movement, they noticeably lag behind actual motion, breaking the illusion of information anchored to physical space. This latency requirement drives deployment of edge computing (local processing on devices and nearby servers) rather than cloud-only processing that introduces unacceptable communication delays.
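A little arithmetic shows why 200 milliseconds is visible. The sketch below converts end-to-end latency into angular misalignment of an overlay while the user turns, and into the number of rendered frames that latency spans. The 90 degrees-per-second turn rate and the 60 fps display are assumed values chosen for illustration, not measurements.

```python
def overlay_lag_degrees(turn_rate_deg_per_s: float, latency_ms: float) -> float:
    """Angle by which an overlay trails the scene while the device rotates."""
    return turn_rate_deg_per_s * latency_ms / 1000.0


def frames_late(latency_ms: float, fps: float = 60.0) -> float:
    """How many display frames elapse during the given latency."""
    return latency_ms * fps / 1000.0


# Assumed scenario: user pans the phone at 90 degrees per second.
lag_at_200ms = overlay_lag_degrees(90.0, 200.0)   # 18 degrees behind
lag_at_100ms = overlay_lag_degrees(90.0, 100.0)   # 9 degrees behind
stale_frames = frames_late(200.0)                 # 12 frames at 60 fps
```

Under these assumptions an arrow rendered 200 milliseconds late points 18 degrees away from where it should, easily enough to indicate the wrong street at an intersection.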

5G Integration enables scaling AR navigation beyond smartphones to vehicle displays, wearable AR glasses, and distributed robotics. Traditional 4G’s 50-100 millisecond latency proved marginal for some AR applications; 5G’s sub-10 millisecond latency and substantially higher bandwidth enable real-time streaming of high-fidelity AR content. The latency improvement is particularly critical for in-vehicle AR displays where processing remotely computed data with 100+ millisecond delays creates dangerous driving conditions.

Consumer AR Navigation Applications: From Maps to Guided Experiences

The practical deployment of AR navigation has already begun, with multiple consumer applications demonstrating the technology’s viability at scale.

Google Maps Live View represents the most mature consumer AR navigation system. Launched in select cities and continuously expanding, Live View uses ARCore (Google’s AR framework) combined with geospatial data to overlay directional arrows, street names, and landmarks directly onto the camera view of streets and intersections. Users approaching complex intersections, subway exits, or pedestrian junctions point their phone camera at the physical location, and the app displays large arrows indicating the correct path forward. The visual clarity eliminates ambiguity that 2D maps create; users never misinterpret directions because they see physical arrows pointing the actual direction to travel. Live View has proven particularly valuable at transit junctions where exiting a subway station creates spatial confusion—the system resolves ambiguity instantly through visual guidance.
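The geometry behind an on-screen direction arrow can be sketched with two standard calculations: the initial great-circle bearing from the user to the next waypoint, and the difference between that bearing and the device's compass heading. This is an illustration of the general idea only, not a description of how Live View is implemented; the function names are hypothetical.

```python
import math


def initial_bearing_deg(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Initial great-circle bearing from point 1 to point 2, in degrees
    clockwise from true north (0 to 360)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return (math.degrees(math.atan2(y, x)) + 360.0) % 360.0


def relative_bearing_deg(bearing: float, heading: float) -> float:
    """Angle the arrow should point relative to where the device faces.
    Negative means turn left, positive means turn right (-180 to 180)."""
    return (bearing - heading + 180.0) % 360.0 - 180.0
```

A waypoint due east (bearing 90) seen by a user facing northeast (heading 45) yields a relative bearing of 45, so the arrow points 45 degrees to the right of the camera's center line.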

AR City by Blippar demonstrates the tourist application of AR navigation. The system recognizes buildings and streets through computer vision, displaying interactive information about historical landmarks, restaurants, and attractions overlaid on the real environment. Instead of consulting guidebooks or searching websites for information about nearby attractions, tourists point their phone camera at buildings surrounding them and receive instant contextual information about what they’re viewing—breaking down the barrier between physical exploration and information access.

Sygic GPS Navigation integrates AR turn-by-turn directions with traditional mapping, enabling drivers to see virtual navigation paths projected onto the road ahead. For pedestrians, the system displays walking directions overlaid on the physical environment, eliminating the need to constantly reference a map app. The system’s real-time updates on traffic, road closures, and points of interest integrate seamlessly without app switching.

Mapbox 3D Live Navigation with Hyundai marks the technology’s transition to in-vehicle deployment. Unveiled at CES 2025, the system provides immersive lane-level guidance displaying the correct driving lane, turn instructions, and collision warnings with ADAS integration—safety features that traditional navigation couldn’t provide because they required understanding lane geometry and surrounding vehicles. This in-vehicle AR navigation represents the frontier where AR transitions from smartphone convenience to essential safety infrastructure.

Enterprise Applications: From Logistics to Retail to Healthcare

Where consumer AR navigation improves convenience, enterprise AR navigation drives measurable operational efficiency improvements.

Logistics and Warehouse Optimization demonstrates AR’s strongest business case. Distribution centers and warehouses containing hundreds of thousands of items create wayfinding challenges for both permanent staff and temporary workers. AR-guided picking routes, calculated to minimize walking distance while respecting inventory locations, reduce picking time by 20-30% compared to traditional paper-based or smartphone-based systems. The visual guidance—displaying on-screen where to walk and which shelf to access—eliminates the cognitive burden of decoding picking instructions on screens. Workers accumulate less fatigue, make fewer picking errors, and process higher order volumes. For companies operating razor-thin logistics margins, these efficiency improvements translate directly to profitability improvements.
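To make "calculated to minimize walking distance" concrete, here is a small sketch that orders pick locations with a nearest-neighbor heuristic on a grid of aisle coordinates. It is a simple greedy approach chosen for brevity, not an optimal solver and not a claim about what commercial warehouse systems run; the grid coordinates and function names are hypothetical.

```python
def manhattan(a, b):
    """Walking distance between two grid cells when movement follows aisles."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def route_length(start, stops):
    """Total distance walked when visiting stops in the given order."""
    total, here = 0, start
    for stop in stops:
        total += manhattan(here, stop)
        here = stop
    return total


def nearest_neighbor_route(start, picks):
    """Greedy ordering: always walk to the closest remaining pick location."""
    remaining = list(picks)
    route, here = [], start
    while remaining:
        nxt = min(remaining, key=lambda p: manhattan(here, p))
        remaining.remove(nxt)
        route.append(nxt)
        here = nxt
    return route


# Hypothetical pick list in the order it appears on the customer order.
dock = (0, 0)
picks = [(9, 0), (1, 0), (8, 1), (2, 1)]
ordered = nearest_neighbor_route(dock, picks)
```

For this pick list, walking in order-sheet sequence covers 31 grid units, while the greedy route covers 11. Real layouts add constraints such as one-way aisles and shelf heights, so the gain in practice depends on the facility.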

Retail Store Navigation addresses a persistent problem: customers in large retail spaces (big-box stores covering 100,000+ square feet) cannot efficiently find specific products. AR navigation systems guide customers directly to products using visual pathfinding displayed on smartphone screens or projection on store floors. The efficiency gain—reducing search time from 5-10 minutes to 30-60 seconds—dramatically improves customer satisfaction while increasing store penetration of back-of-store products that customers might not discover through traditional browsing. Retailers gain valuable behavioral data about how customers navigate stores, enabling layout optimization.

Healthcare Facility Wayfinding uses AR to guide patients through complex hospital layouts. Large medical centers contain dozens of departments distributed across multiple floors and wings; patients often spend 20+ minutes trying to locate appointment locations, increasing stress and anxiety before medical procedures. AR wayfinding systems guide patients directly to correct departments and examination rooms, improving patient experience while reducing staff time spent answering directions. The accessibility benefits prove particularly significant: elderly and mobility-impaired patients can navigate independently with AR guidance rather than requiring escort assistance.

Indoor Airport Guidance represents a high-value use case. Airports present notorious wayfinding challenges: unfamiliar travelers must locate gates, security lines, transportation, restaurants, and restrooms within unfamiliar, complex terminal layouts. AR navigation guides travelers directly through optimal paths from entry to gates, reducing navigation anxiety while improving customer satisfaction. The system integrates real-time flight information and gate changes, automatically rerouting travelers when departure gates shift.
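The rerouting behavior can be sketched as a shortest-path query over a graph of walkable segments, rerun from the traveler's current location whenever the gate changes. The terminal graph below, its node names, and its distances in meters are invented for illustration; Dijkstra's algorithm is used here as one common choice, not as the method any specific airport system is known to use.

```python
import heapq


def shortest_path(graph, start, goal):
    """Dijkstra's algorithm. Returns (total_cost, list_of_nodes), or
    (inf, []) when the goal cannot be reached."""
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, weight in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + weight, neighbor, path + [neighbor]))
    return float("inf"), []


# Hypothetical terminal layout; edge weights are walking distances in meters.
TERMINAL = {
    "entrance": {"security": 60},
    "security": {"entrance": 60, "concourse": 40},
    "concourse": {"security": 40, "gate_a12": 120, "gate_b4": 200, "food_court": 50},
    "food_court": {"concourse": 50, "gate_b4": 90},
    "gate_a12": {"concourse": 120},
    "gate_b4": {"concourse": 200, "food_court": 90},
}

# Initial plan, then a gate change announced while the traveler is mid-route.
original = shortest_path(TERMINAL, "entrance", "gate_a12")
rerouted = shortest_path(TERMINAL, "concourse", "gate_b4")
```

The reroute starts from the traveler's current node rather than the entrance, which is what lets the guidance change direction mid-journey instead of restarting.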

The Positioning Accuracy Spectrum: Different Solutions for Different Applications

AR navigation doesn’t rely on a single positioning technology but rather employs a spectrum of technologies matched to specific accuracy requirements and operational environments.

Standard GPS (5-10 meters accuracy) proves sufficient for outdoor urban navigation, directing pedestrians toward destinations, neighborhoods, and districts. This level of precision enables basic turn-by-turn walking navigation where meter-level accuracy is unnecessary, because humans naturally perceive a range of viable paths within an approximately 5-meter radius.

WiFi Triangulation (2-5 meters accuracy) augments GPS in urban canyons where tall buildings block satellite signals. WiFi access points throughout cities provide supplemental positioning, improving accuracy and coverage in challenging outdoor environments.

Bluetooth Beacons (1-3 meters accuracy) create precise indoor positioning when deployed in structured environments like retail stores, warehouses, and airports. The beacon’s limited range (30-100 meters) and signal-strength-based distance estimation enable meter-level accuracy sufficient for wayfinding in indoor environments.
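Beacon ranging is commonly approximated from received signal strength using the log-distance path-loss model. The sketch below shows that model under assumed parameters: a calibrated reading of -59 dBm at one meter and a free-space path-loss exponent of 2.0, both of which vary by beacon hardware and environment. The function name is hypothetical.

```python
def rssi_to_distance_m(rssi_dbm: float,
                       rssi_at_1m_dbm: float = -59.0,
                       path_loss_exponent: float = 2.0) -> float:
    """Estimate distance to a beacon from signal strength using the
    log-distance path-loss model. Defaults are assumed calibration values."""
    return 10.0 ** ((rssi_at_1m_dbm - rssi_dbm) / (10.0 * path_loss_exponent))


near = rssi_to_distance_m(-59.0)   # about 1 m: matches the calibration point
far = rssi_to_distance_m(-79.0)    # about 10 m: 20 dB weaker signal
```

Because distance grows exponentially with signal loss, a few decibels of fluctuation from bodies, shelving, or reflections shifts the estimate noticeably, which helps explain why beacon accuracy is quoted in meters and why readings are usually averaged across several beacons.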

Ultra-Wideband (10-30 centimeters accuracy) represents an emerging technology providing decimeter-level indoor positioning without requiring dense beacon networks. Ultra-wideband’s ability to measure sub-nanosecond time differences in signal propagation enables accuracy comparable to differential GPS, but indoors where satellite signals fail.
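The link between timing resolution and ranging accuracy follows directly from the speed of light. The sketch below converts a time of flight into distance and shows a basic two-way ranging calculation; the function names and the fixed reply delay are illustrative assumptions, and real ultra-wideband stacks also correct for clock drift and antenna delay.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(time_of_flight_s: float) -> float:
    """Distance a radio signal covers in the given one-way flight time."""
    return SPEED_OF_LIGHT_M_PER_S * time_of_flight_s


def two_way_range_m(round_trip_s: float, reply_delay_s: float) -> float:
    """Range from a round-trip measurement: subtract the responder's known
    processing delay, then halve to get the one-way flight time."""
    return tof_distance_m((round_trip_s - reply_delay_s) / 2.0)


# One nanosecond of timing error corresponds to roughly 30 cm of range error.
error_per_ns = tof_distance_m(1e-9)
```

Since one nanosecond equals about 0.3 meters of travel, resolving time differences below a nanosecond is what makes ranging in the 10-30 centimeter band achievable.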

Differential GPS (DGPS, 0.3 meters accuracy) supplements standard GPS by broadcasting correction factors from ground stations, improving civilian accuracy by more than an order of magnitude over the 5-10 meters of standard GPS. This technology, widely used in surveying and precision agriculture, provides sufficient accuracy for demanding applications requiring precise positioning but not millimeter precision.

Real-Time Kinematic GPS (0.005 meters/0.5 centimeters accuracy) achieves millimeter-level positioning through sophisticated signal processing and ground-station corrections. RTK GPS, while extraordinarily accurate, requires expensive infrastructure and processing, limiting applicability to high-precision applications like autonomous vehicle testing and surveying.

Visual Positioning (1-5 centimeters accuracy) using computer vision and 3D maps provides complementary positioning independent of GPS and radio signals. This approach enables seamless indoor positioning, high accuracy in challenging outdoor environments, and resilience against jamming or spoofing attacks.

This spectrum of technologies enables AR systems to optimize positioning accuracy to match application requirements, balancing accuracy demands against cost, power consumption, and computational complexity.
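Matching technology to requirements can be sketched as a simple lookup over the accuracy figures listed above. The table uses the upper end of each range quoted in this section; the indoor and outdoor flags are a reading of the descriptions rather than vendor specifications, and the names `Tech`, `SPECTRUM`, and `candidates` are hypothetical.

```python
from typing import List, NamedTuple


class Tech(NamedTuple):
    name: str
    worst_case_m: float  # upper end of the accuracy range quoted above
    indoor: bool         # assumed usable indoors
    outdoor: bool        # assumed usable outdoors


SPECTRUM = [
    Tech("Standard GPS", 10.0, indoor=False, outdoor=True),
    Tech("WiFi Triangulation", 5.0, indoor=True, outdoor=True),
    Tech("Bluetooth Beacons", 3.0, indoor=True, outdoor=False),
    Tech("Ultra-Wideband", 0.3, indoor=True, outdoor=False),
    Tech("Differential GPS", 0.3, indoor=False, outdoor=True),
    Tech("RTK GPS", 0.005, indoor=False, outdoor=True),
    Tech("Visual Positioning", 0.05, indoor=True, outdoor=True),
]


def candidates(required_accuracy_m: float, indoors: bool) -> List[str]:
    """Technologies whose worst-case accuracy meets the requirement
    in the given environment, in the order listed above."""
    return [
        t.name
        for t in SPECTRUM
        if t.worst_case_m <= required_accuracy_m
        and (t.indoor if indoors else t.outdoor)
    ]


shelf_level = candidates(1.0, indoors=True)
street_level = candidates(10.0, indoors=False)
```

A fuller version would also rank the candidates by cost, power draw, and infrastructure needs, which are the tradeoffs the paragraph above names but this section does not quantify.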

Architectural Evolution: From Smartphones to In-Vehicle to Wearables

The deployment architecture for AR navigation is diversifying as the technology matures, moving beyond smartphones to integrated vehicle systems and emerging wearable devices.

Smartphone AR Navigation represents the current dominant deployment model. Google Maps Live View, Blippar, Sygic, and other consumer applications leverage ubiquitous smartphone cameras, sensors, and processing power. The advantage is immediate global reach—billions of people already carry capable devices. The disadvantage is ergonomic: holding a smartphone up to face level for extended navigation creates arm fatigue and reduces situational awareness of actual surroundings beyond the camera view.

Head-Up Display (HUD) Integration addresses the smartphone disadvantage by projecting AR information onto vehicle windshields or transparent overlays visible to drivers. Information appears at eye level without requiring manipulation of devices, maintaining focus on the road. Premium automotive manufacturers increasingly integrate AR HUDs providing lane-level guidance, collision warnings, and environmental information directly in the driver’s natural line of sight.

Augmented Reality Glasses represent the emerging frontier where AR navigation becomes ubiquitous wearable technology. AR glasses providing continuous visual augmentation of the physical world would enable pedestrians, cyclists, and drivers to access navigation guidance, environmental information, and real-time alerts without any device manipulation. Technical challenges—battery life (multi-hour continuous operation on lightweight glasses), acceptable weight/comfort, optical clarity, and privacy concerns—remain substantial. However, companies including Meta, Microsoft (HoloLens), and Magic Leap are advancing toward mass-market deployable solutions.

In-Vehicle Dashboard Displays integrate AR navigation into vehicle information systems, displaying 3D navigation visualizations on dashboard screens, head-up displays, and instrument clusters. Mapbox’s 2025 Hyundai partnership exemplifies this direction, providing lane-level guidance and collision warnings through automotive-grade AR systems.

This architectural diversification suggests that AR navigation will eventually span the entire spectrum from smartphones to glasses to in-vehicle systems, with information synchronized and optimized for each interface while maintaining unified underlying navigation models.

Market Growth and Regional Adoption Patterns

The AR navigation market exhibits dramatic regional variation in adoption trajectories reflecting different economic structures, technology infrastructure, and use case emphasis.

North America leads in consumer and automotive AR navigation adoption, driven by smartphone penetration, strong automotive industry integration, and logistics sector emphasis. Enterprise adoption focuses on warehouse and retail applications where labor cost efficiency drives adoption decisions. The U.S. market’s competitive intensity—Google, Apple, Amazon, and dozens of startups competing—accelerates innovation and deployment velocity.

Europe emphasizes privacy-respecting AR navigation deployments, with GDPR compliance requirements shaping system architecture and data handling practices. Enterprise adoption focuses on healthcare and smart city applications where public institutions drive adoption. The presence of strong mapping companies (HERE, TomTom) creates competitive alternative ecosystems.

Asia Pacific exhibits the fastest growth trajectory, with projected compound annual growth rates exceeding 42% through 2030. The growth drivers include rapid urbanization in China, India, and Southeast Asia; massive smart city projects particularly in China deploying AR navigation at urban scale; and strong consumer technology adoption. Baidu, Alibaba, and other Chinese tech giants are deploying AR navigation systems as integral components of broader digital ecosystem strategies.

Japan and South Korea demonstrate sophisticated technology adoption where AR navigation integration into vehicles and consumer applications advances rapidly due to consumer technology enthusiasm and automotive manufacturing excellence.
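Growth figures like these are easy to sanity-check with the compound annual growth rate formula. The sketch below applies it to the headline numbers quoted in this article ($1.62 billion in 2024, $11.69 billion by 2030, 25.35% CAGR); the function names are hypothetical, and treating 2024 to 2030 as a six-year window is an assumption, since the article does not state the forecast's base year.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Annual growth rate that turns start_value into end_value over `years`."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` annually for `years`."""
    return start_value * (1.0 + rate) ** years


START_2024_BN = 1.62    # USD billions, as quoted in the article
END_2030_BN = 11.69     # USD billions, as quoted in the article
QUOTED_CAGR = 0.2535    # as quoted in the article
YEARS = 2030 - 2024     # assumed six-year window

rate_from_endpoints = implied_cagr(START_2024_BN, END_2030_BN, YEARS)
value_at_quoted_rate = project(START_2024_BN, QUOTED_CAGR, YEARS)
```

Under the six-year assumption, the two endpoints imply growth of roughly 39% per year, while compounding at 25.35% from $1.62 billion reaches only about $6.3 billion. The quoted figures may therefore rest on a different base year or forecast window than the endpoints suggest, which is worth checking against the original market report.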

Technical Challenges and the Path Toward Resolution

Despite compelling applications and market opportunity, AR navigation technology faces persistent technical challenges requiring continued innovation.

Tracking Stability and Jitter remain problematic in early deployments. AR objects displayed on smartphone screens can appear to flicker, drift, or misalign with physical locations when tracking deviates slightly from ground truth. Environmental inconsistencies (varying lighting conditions, reflections, dynamic movement of people and vehicles) introduce tracking noise that manifests as visually disconcerting instability in AR elements. Solutions involve AI-driven object recognition that dynamically adjusts rendering based on environmental conditions, cloud-based AR processing improving rendering precision, and optimized frame rates (60+ fps) reducing jitter perception.

Battery Drain and Processing Demands create practical usability limitations. Continuous GPS/sensor operation combined with intensive camera processing and AR rendering drains batteries rapidly, limiting session length to 2-3 hours on contemporary smartphones. Power management strategies including local processing (reducing cloud communication), efficient algorithms, and hardware acceleration help mitigate the problem, but the fundamental tradeoff between capability and power consumption persists.

Indoor Positioning Accuracy requires substantial infrastructure investment. Visual positioning systems demand pre-built 3D maps of interior spaces; WiFi/Bluetooth beacon deployment requires installation and maintenance. Unlike outdoor GPS (infrastructure funded publicly as government service), indoor AR navigation requires private investment for each building or complex. This economic barrier slows indoor AR deployment compared to outdoor applications.

Environmental Variation and Model Generalization create challenges for visual positioning systems. Maps trained in summer conditions may fail in winter snow when familiar landmarks appear transformed. Low-light conditions degrade camera-based positioning. Unusual weather (heavy rain obscuring visual features) disrupts visual tracking. Solutions involve training diverse models on varied environmental conditions and sensor fusion enabling fallback to complementary positioning modalities.

Privacy and Surveillance Concerns introduce regulatory and social barriers. Continuous location tracking enables detailed behavioral surveillance that many users rightfully regard as privacy violations. Regulatory frameworks like GDPR constrain data collection and retention, while user privacy expectations require transparent data handling and effective anonymization. Balancing personalization (enabled by understanding user behaviors) against privacy protection requires sophisticated data governance.

The Future: From Smartphone Apps to Ubiquitous Spatial Computing

The trajectory of AR navigation points toward a future where augmented reality becomes the dominant interface through which humans perceive and navigate physical space.

5G and Beyond infrastructure deployment removes real-time latency constraints that currently limit AR responsiveness. 5G’s sub-10-millisecond latency and higher bandwidth enable streaming high-fidelity AR content, cloud-based processing of complex computer vision tasks, and real-time synchronization with server-side information. 6G research promises even lower latency and higher bandwidth, creating increasingly powerful infrastructure supporting ubiquitous AR experiences.

Digital Twins at Global Scale represent the long-term vision: complete 3D replicas of Earth’s surface and built environments, continuously updated as physical reality changes. This digital twin layer, accessible via AR interfaces, would enable unprecedented navigation capabilities, environmental simulation, and spatial data analysis. Companies including Google, Apple, and others are investing in building components of this vision through Street View, mapping infrastructure, and 3D reconstruction technology.

AI-Powered Contextual Intelligence will enable AR navigation to become increasingly sophisticated in understanding user intent and providing relevant guidance. Rather than simply displaying turn-by-turn directions, future AR systems might anticipate user needs—automatically displaying restaurant options when detecting user fatigue during travel, highlighting hazards based on environmental understanding, or suggesting activities based on location and interest profile.

Wearable AR Glasses will eventually replace smartphones as the primary interface for AR navigation. Lightweight glasses providing continuous visual augmentation, updated as users move through environments, will enable seamless access to navigation and environmental information without any device manipulation. The transition requires solving battery life, weight, optical clarity, and privacy concerns—formidable but ultimately solvable technical challenges.

Autonomous Vehicle Integration depends fundamentally on AR navigation providing context that vehicle sensors alone cannot determine. Infrastructure-provided information about road conditions, traffic regulations, emergency vehicle presence, and environmental hazards enriches the autonomous system’s understanding of its operational environment, improving safety and enabling more complex scenarios than onboard sensors can handle.

Conclusion: Navigation as Gateway to Spatial Computing

Augmented reality navigation represents far more than a cosmetic improvement over traditional 2D maps. It fundamentally restructures the relationship between humans and physical space, transforming navigation from an information access problem (consulting separate maps) to direct visual augmentation of perception itself.

The technology is maturing rapidly: Google Maps Live View operates in major cities globally, Mapbox’s automotive integration with Hyundai demonstrates in-vehicle deployment, and enterprise applications in logistics, retail, and healthcare validate operational benefits. The market, valued at $1.62 billion in 2024, is projected to reach $11.69 billion by 2030 at a 25.35% compound annual growth rate, driven by 5G deployment enabling real-time AR experiences, expanding smartphone and wearable device adoption, and compelling business cases across enterprise sectors.

The technical challenges are surmountable through continued innovation: tracking stability improving through AI and cloud processing, positioning accuracy expanding across indoor/outdoor environments through sensor fusion, battery efficiency improving through hardware acceleration and algorithm optimization, and privacy protection strengthening through robust data governance frameworks.

The path forward leads toward ubiquitous augmented reality where spatial information becomes as accessible and natural as human perception itself. AR glasses providing continuous visual augmentation, digital twins of Earth’s environments enabling precise navigation anywhere, and AI-powered contextual intelligence anticipating user needs will gradually replace the separate “navigation app” paradigm with seamless spatial computing integrated into how humans perceive and interact with the physical world.

For individual users, this means navigation becoming intuitive, accessible, and invisible—guidance appearing exactly where needed without conscious effort. For enterprises, AR navigation drives operational efficiency improvements, customer experience enhancement, and data insights enabling continuous optimization. For society, ubiquitous spatial computing enabled by AR navigation creates possibilities for accessibility (enabling independent navigation for people with disabilities), safety (reducing accidents through better information), and environmental awareness (seeing real-time information about environmental conditions and impacts).

The next step in GPS technology is not another generation of satellites or signal processing algorithms. It is the complete transformation of navigation from a separate functional domain into the perceptual fabric through which humans understand and move through physical space. Augmented reality navigation is that transformation.